Explore key considerations for building production-ready ML systems with practical use cases

Introduction

Building a successful machine learning (ML) model is a significant achievement, but its true value is realized only when the model is deployed in the real world.

Creating production-ready ML systems is therefore crucial, yet challenging, because it demands a holistic approach that covers the model’s entire end-to-end workflow.

In this article, I’ll explore key components to consider when designing ML systems, using a personal recommendation engine as a case study.

What is Machine Learning System Design

Machine Learning (ML) system design refers to the comprehensive process of building the entire infrastructure to develop, deploy, monitor, and maintain machine learning models in a production (live) environment.

This process creates an ecosystem around models, making sure that the entire system achieves the following core principles:

- Reliability: Functioning consistently even when some components fail.

- Scalability: Handling increasing amounts of data, users, and requests.

- Performance: Meeting specific prediction requirements, such as latency and throughput.

- Security: Protecting sensitive data and models.

- Maintainability: Easy to update, debug, and evolve over time.

- Cost-Efficiency: Optimizing resource usage.

Key Components in ML System Design

Since system design covers the model’s entire end-to-end workflow, we need to consider the following components:

1. Problem Formulation: Defines the problem and data.

2. Model Development: Chooses and trains the right models.

3. Deployment: Makes models accessible in production.

- Deployment Method: Chooses inference types and serving platforms.

- Scalability: Ensures the system can handle increasing load and failures.

- Security: Protects data, models, and API endpoints, if any.

4. Maintenance (MLOps):

- Reproducibility: Versions data and models to recreate results.

- Monitoring: Tracks system performance and sends alerts.

- Automation: Automates the optimization process for stable performance.

5. Feedback Loops: Continuously retrains and redeploys models based on insights from the maintenance process.

Why ML System Design is Important

Without solid ML system design, even the most accurate machine learning model can fail in production.

Common challenges that a system faces in production are:

- model degradation,

- performance bottlenecks, and

- a lack of proper monitoring to raise alerts when something needs attention.

Model Degradation

Model degradation refers to a decline in a model’s performance over time after deployment, directly impacting the business value that the model is supposed to deliver.

The major causes of model degradation are concept drift and data drift.

- Concept drift: The relationship between input data and target variables changes over time, making the model’s predictions inaccurate.

- Data drift: The properties of input data change over time, making them different from what the model was originally trained on.

For instance, suppose a model trained to predict churn has learned login_frequency as one of the most influential features for flagging churn risk.

Last month, a new marketing campaign introduced a 14-day free trial for new users.

With the influx of new users, churn is now strongly influenced by engagement during the free trial, and the model’s predictions get worse.

This is concept drift because the concept of churn itself has shifted due to an unforeseen event (the new campaign), making the model’s reliance on login_frequency far less effective.

In a separate case, let’s say the model has learned that fewer than 5 logins during the first 14 days signals churn.

But due to the campaign, the vast majority of new users start to log in more than 40 times in the first 14 days, so the threshold is no longer accurate.

This is data drift because the concept of churn itself didn’t change, but the distribution of login_frequency has drastically shifted due to the new campaign.
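
One way to make the data drift case measurable is to compare the feature’s distribution at training time with its recent distribution. The sketch below uses the Population Stability Index (PSI), which is my choice for illustration rather than something the scenario prescribes. The login counts, the bin edges, and the function name are all hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bin_edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI between two samples of one feature, using fixed bin edges."""

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # The bin index is the number of edges at or below the value.
            idx = sum(1 for edge in bin_edges if v >= edge)
            counts[idx] += 1
        total = len(values)
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / total, eps) for c in counts]

    base = bin_shares(baseline)
    cur = bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical login counts during the first 14 days (not real data).
training_logins = [2, 3, 4, 6, 8, 10, 12, 15, 18, 20]
campaign_logins = [38, 41, 42, 44, 45, 47, 50, 52, 55, 60]
edges = [5, 10, 20, 40]  # assumed bin edges

psi_same = population_stability_index(training_logins, training_logins, edges)
psi_shifted = population_stability_index(training_logins, campaign_logins, edges)
print(f"PSI vs. itself: {psi_same:.3f}, PSI after campaign: {psi_shifted:.3f}")
```

A common rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the right threshold depends on the feature and the business context. Note that a check like this only catches data drift. Concept drift usually requires comparing predictions against actual outcomes.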

Performance Bottleneck

A performance bottleneck occurs when the system cannot handle an increasing volume of data, users, or requests, leading to degraded service and a poor user experience.

For example, a real-time product recommendation engine built for 1,000 requests per second suddenly receives 100,000 requests per second during a holiday sale.

The underlying infrastructure was not designed to scale horizontally, so the recommendation service becomes unresponsive, frustrating shoppers.

As a result, the company loses potential sales.
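
To get a feel for the size of that gap, here is a back-of-the-envelope estimate of how many identical service replicas each traffic level would need. The per-replica capacity of 400 requests per second is an assumed figure for illustration, and `replicas_needed` is a hypothetical helper.

```python
def replicas_needed(peak_rps: int, rps_per_replica: int) -> int:
    """Smallest number of identical replicas whose combined capacity covers peak_rps."""
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    # Integer ceiling division avoids floating-point rounding.
    return -(-peak_rps // rps_per_replica)


ASSUMED_RPS_PER_REPLICA = 400  # hypothetical sustained capacity of one replica

normal_day = replicas_needed(1_000, ASSUMED_RPS_PER_REPLICA)
holiday_sale = replicas_needed(100_000, ASSUMED_RPS_PER_REPLICA)
print(normal_day, holiday_sale)
```

Under this assumption, the sale needs roughly a hundred times more replicas than a normal day, which is only achievable if the service can scale horizontally in the first place.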

Lack of Proper Monitoring

Lack of monitoring refers to the absence of the right processes to track system performance. Without monitoring, problems go undetected until they cause significant damage.

For example, a delivery company deployed a model to optimize shipping routes, but did not set up a proper monitoring system.

For weeks, the company did not realize that the model was no longer generating optimal routes because of recently started road construction (data drift). The problem surfaced only after a wave of customer complaints about delayed deliveries escalated to the team.
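
Even a very simple check could have surfaced this earlier. The following is a minimal sketch of a rolling-average alert, assuming we log the delivery time of each completed route. The class name, the 30-minute baseline, and the 20% tolerance are hypothetical choices, not values from the case above.

```python
from collections import deque


class RollingMetricMonitor:
    """Signals an alert when the rolling mean of a metric exceeds baseline * (1 + tolerance)."""

    def __init__(self, baseline: float, tolerance: float, window: int) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.values = deque(maxlen=window)  # keeps only the latest `window` values

    def observe(self, value: float) -> bool:
        """Record one observation and return True when an alert should fire."""
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return False  # not enough observations to fill the window yet
        rolling_mean = sum(self.values) / len(self.values)
        return rolling_mean > self.baseline * (1 + self.tolerance)


# Hypothetical delivery times in minutes; the last two reflect the road construction.
monitor = RollingMetricMonitor(baseline=30.0, tolerance=0.2, window=3)
alerts = [monitor.observe(minutes) for minutes in [29, 31, 30, 45, 50]]
print(alerts)
```

In a real system the alert would go to an on-call channel or dashboard rather than a list, and the window and tolerance would be tuned to avoid both noise and late detection.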

A well-designed ML system can prevent these challenges from happening.

Now, let us explore the key components in ML system design one by one.

1. Problem Formulation

Machine learning projects are resource intensive.

So, before even thinking about models, we need to clearly define the business problem we’re solving and whether machine learning is the best solution.

Asking ourselves the following questions helps define clear objectives and problem statements:

- What core problems are we trying to solve?

- Why do we need to use machine learning to solve the problem? Is it worth it?

- What metrics would define success for both the business and the model? Do they align well?

The Feasibility Check: Data Collection and Preparation

This phase also includes data collection and preparation because data availability and its quality dictate whether building models is the best solution for the problem.

If we don’t have enough high-quality data relevant to the problem, even the most sophisticated algorithms won’t perform well.

In such cases, a simpler rule-based system or even a human-driven process can be a better solution.

This phase is often the most time-consuming because we need to:

- Understand data sources (merging multiple data sources, transforming unstructured data),

- Augment or acquire data if needed,

- Ensure data quality,

- Handle missing values, and

- Perform feature engineering.
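
As a small illustration of the last two steps, the sketch below fills missing values with the column median and derives one new feature. The records, column names, and helper functions are hypothetical, and median imputation is just one of several reasonable strategies.

```python
from statistics import median
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]

# Hypothetical user activity records with missing values (None).
records: List[Row] = [
    {"user_id": 1, "logins_14d": 12, "session_minutes": 240.0},
    {"user_id": 2, "logins_14d": None, "session_minutes": 30.0},
    {"user_id": 3, "logins_14d": 4, "session_minutes": None},
    {"user_id": 4, "logins_14d": 8, "session_minutes": 120.0},
]


def impute_median(rows: List[Row], column: str) -> List[Row]:
    """Return copies of rows with missing values in `column` replaced by the median."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill = median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def add_minutes_per_login(rows: List[Row]) -> List[Row]:
    """Feature engineering: average session minutes per login (guarding against zero logins)."""
    return [
        {**r, "minutes_per_login": r["session_minutes"] / max(r["logins_14d"], 1)}
        for r in rows
    ]


clean = impute_median(impute_median(records, "logins_14d"), "session_minutes")
features = add_minutes_per_login(clean)
for row in features:
    print(row)
```

In practice the fill values should be computed on the training data only and reused at inference time, so that the model sees consistently prepared inputs.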

2. Model Development

Once we’re confident in the value and feasibility of the machine learning project, we select and train models tailored to the specific problem.

The key components include:

- Algorithm Choice: Select an algorithm appropriate for the problem and data types.

- Model Training and Validation: Establish training and validation pipelines and assess generalization performance.

- Hyperparameter Tuning: Systematically search for the hyperparameters that give the best performance.

Each step involves numerous techniques and points to consider. Here are some relevant articles:
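
To show how validation and tuning fit together, here is a deliberately tiny sketch: a one-feature k-nearest-neighbors classifier written in plain Python, evaluated on a holdout set for a small grid of k values. The data is made up, the algorithm is chosen only because it is short, and a real project would typically rely on a library and cross-validation instead.

```python
from collections import Counter
from typing import List, Tuple

Sample = Tuple[float, int]  # (logins in first 14 days, churned: 1 or 0)


def knn_predict(train: List[Sample], x: float, k: int) -> int:
    """Predict the majority label among the k training points nearest to x."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train: List[Sample], holdout: List[Sample], k: int) -> float:
    """Share of holdout samples whose label is predicted correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in holdout)
    return hits / len(holdout)


# Hypothetical samples, for illustration only.
data: List[Sample] = [
    (1, 1), (2, 1), (3, 1), (4, 1), (5, 0), (6, 1), (7, 0), (9, 0),
    (10, 0), (12, 0), (2, 1), (8, 0), (5, 1), (11, 0), (3, 0), (14, 0),
]

# Hold out the last 25% of samples for validation.
split = int(len(data) * 0.75)
train, validation = data[:split], data[split:]

# Hyperparameter tuning: try each k (odd values avoid ties) and keep the best one.
grid = [1, 3, 5]
scores = {k: accuracy(train, validation, k) for k in grid}
best_k = max(scores, key=scores.get)
print(scores, "best k:", best_k)
```

The important part is the separation of roles: the training set fits the model, the validation set picks the hyperparameter, and a further untouched test set would be needed for an unbiased final estimate.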

- Master Hyperparameter Tuning in Machine Learning

- Achieving Accuracy in Machine Learning

3. Deploying Machine Learning Models

After building models, we need to deploy them in production for clients like users, downstream services, or APIs.

In this section, I’ll focus on practical decisions to make during the deployment phase, breaking down the key considerations into three areas:

- Choosing deployment methods: How will the model deliver predictions?

- Designing for scalability & reliability: Can the system handle the load and recover from issues?

- Implementing security: Are the model and data protected?

3–1. Choosing Deployment Methods

When choosing deployment methods, there are two points to consider:

- Inference types and

- Serving platforms.

Inference Types

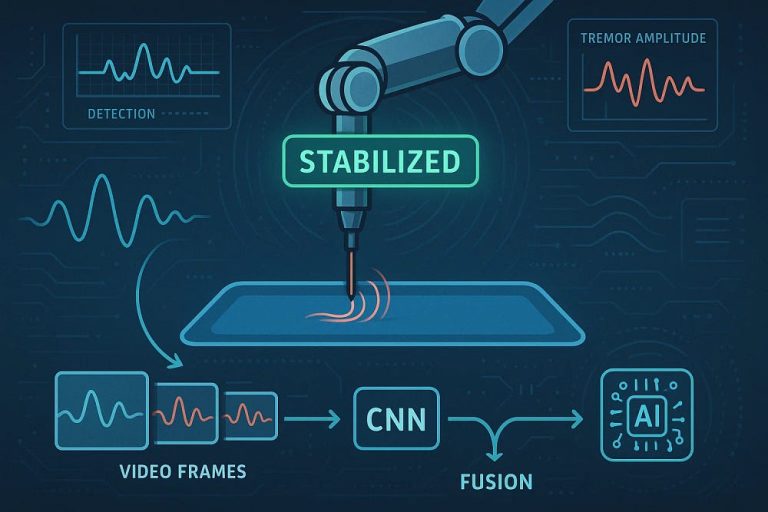

In the context of machine learning, inference refers to the process of using a trained model to make predictions on new, unseen data.

Inference types fall primarily into two categories: batch inference and real-time inference.

Our choice depends on when we need predictions.
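
The difference is easiest to see when the same model is called in both ways. In this sketch, `score` is a hypothetical stand-in for a trained recommendation model and `recent_views` is an assumed feature. Only the calling pattern matters here.

```python
from typing import Dict

Features = Dict[str, float]


def score(user_features: Features) -> float:
    """Stand-in for a trained model: maps features to a score between 0 and 1."""
    return min(1.0, user_features["recent_views"] / 10)


def batch_inference(all_users: Dict[str, Features]) -> Dict[str, float]:
    """Batch: score many users in one scheduled run and store the results for later lookup."""
    return {user_id: score(features) for user_id, features in all_users.items()}


def realtime_inference(request_features: Features) -> float:
    """Real-time: score a single request at the moment it arrives."""
    return score(request_features)


# Batch run (for example, nightly): predictions exist before anyone asks for them.
precomputed = batch_inference({
    "u1": {"recent_views": 5.0},
    "u2": {"recent_views": 20.0},
})

# Real-time call: the prediction is computed on demand with the freshest features.
on_demand = realtime_inference({"recent_views": 2.0})
print(precomputed, on_demand)
```

Batch inference trades freshness for cheap, predictable computation, while real-time inference uses the latest features at the cost of tighter latency requirements on the serving path.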
