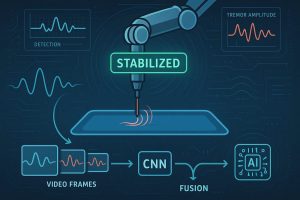

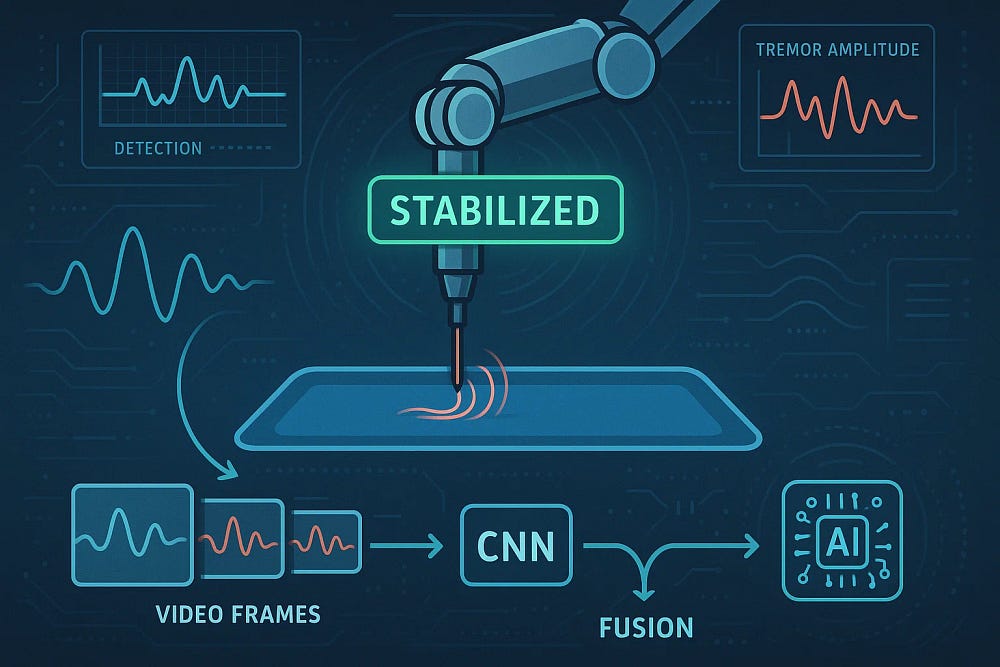

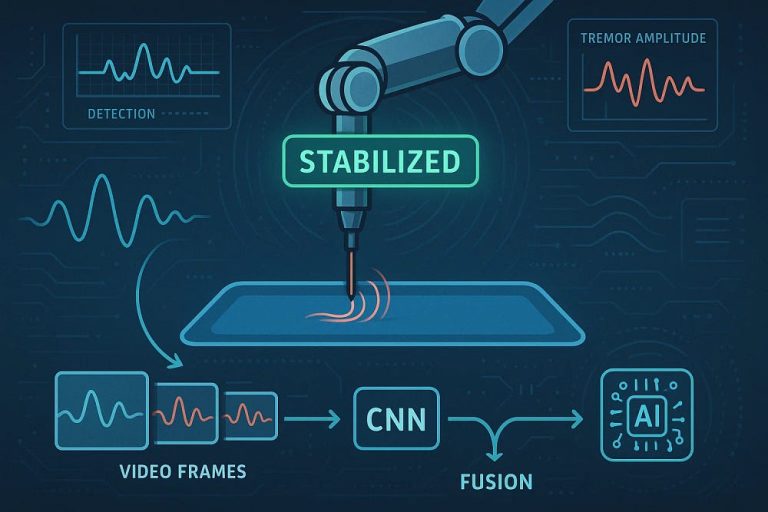

Develop an AI system that detects fine tremors in robotic surgical tools using video and accelerometer (IMU) data, enabling real-time correction feedback and precision overlay.

Input Modalities

1. Tool Movement Video Feed

- High-frame-rate endoscopic or external video of robotic arm

- Extracted frames → CNN input

2. Accelerometer / IMU Data

- Sampled at high frequency (100–200 Hz)

- 3-axis data: [acc_x, acc_y, acc_z]

- Synced with video timestamps
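Because the IMU samples several times per video frame, each frame has to be paired with the IMU sample closest to it in time. A minimal sketch of that pairing, assuming both streams are stamped in seconds on a shared clock; `nearest_imu_index` is a hypothetical helper for illustration, not the contents of the project's `sync_tools.py`.

```python
import numpy as np

def nearest_imu_index(frame_ts, imu_ts):
    """For each frame timestamp, return the index of the closest IMU sample.

    Assumes imu_ts is sorted ascending, has at least two entries, and uses
    the same clock (seconds) as frame_ts.
    """
    frame_ts = np.asarray(frame_ts, dtype=float)
    imu_ts = np.asarray(imu_ts, dtype=float)
    # Index of the first IMU sample at or after each frame timestamp.
    right = np.searchsorted(imu_ts, frame_ts, side="left")
    right = np.clip(right, 1, len(imu_ts) - 1)
    left = right - 1
    # Pick whichever neighbour is closer in time.
    use_left = (frame_ts - imu_ts[left]) <= (imu_ts[right] - frame_ts)
    return np.where(use_left, left, right)

# Example: one second of 200 Hz IMU data and 30 fps video (illustrative rates).
imu_ts = np.arange(200) / 200.0
frame_ts = np.arange(30) / 30.0
idx = nearest_imu_index(frame_ts, imu_ts)
```

To feed the LSTM, a window of IMU samples ending at `idx[i]` can then be sliced out for frame `i`.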

Project Structure

bash

TremorDetection/
├── data/
│   ├── imu/                  # CSV/TSV with accelerometer readings
│   └── videos/               # Robotic tool movement clips
├── models/
│   ├── cnn_model.py
│   ├── lstm_model.py
│   └── fusion_model.py
├── utils/
│   ├── fft_analysis.py
│   └── sync_tools.py
├── scripts/
│   ├── train_fusion.py
│   ├── infer_realtime.py
│   └── visualize_overlay.py
└── dashboard/
    └── tremor_ui.py

Step 1: IMU Preprocessing + FFT

python

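# utils/fft_analysis.py
import numpy as np

def extract_fft_features(signal, fs=200):
    """Return the dominant frequency (Hz) and the FFT magnitude spectrum."""
    signal = np.asarray(signal, dtype=float)
    N = len(signal)
    freqs = np.fft.fftfreq(N, d=1 / fs)
    fft_vals = np.abs(np.fft.fft(signal))
    # Search positive frequencies only and skip the DC bin (index 0);
    # the +1 maps the slice index back onto the full spectrum.
    dom_idx = np.argmax(fft_vals[1:N // 2]) + 1
    dom_freq = freqs[dom_idx]
    return dom_freq, fft_vals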
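A quick way to sanity-check dominant-frequency extraction is to run it on a synthetic signal with a known oscillation. The sketch below is a self-contained variant that also removes the gravity offset and applies a Hann window; the 9 Hz test frequency, amplitudes, and the `dominant_frequency` name are illustrative assumptions, not values from the project.

```python
import numpy as np

def dominant_frequency(signal, fs=200):
    """Dominant non-DC frequency (Hz) of a 1-D signal sampled at fs Hz."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                       # remove gravity / DC offset
    x = x * np.hanning(len(x))             # reduce spectral leakage
    mags = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1 / fs)
    return freqs[1:][np.argmax(mags[1:])]  # skip the DC bin

fs = 200
t = np.arange(2 * fs) / fs                 # 2 s window -> 0.5 Hz resolution
rng = np.random.default_rng(0)
# Synthetic z-axis reading: gravity + small 9 Hz oscillation + sensor noise.
acc_z = 9.81 + 0.05 * np.sin(2 * np.pi * 9 * t) + 0.005 * rng.standard_normal(t.size)
dom = dominant_frequency(acc_z, fs)        # expected to land near 9 Hz
```

The window length sets the frequency resolution (`fs / N`), so shorter windows react faster but resolve the tremor frequency more coarsely.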

Step 2: CNN for Visual Tremor Detection

python

# models/cnn_model.py
import torch.nn as nn

class TremorCNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, 5, stride=2),
            nn.ReLU(),
            nn.Conv2d(16, 32, 3),
            nn.ReLU(),
            nn.Flatten()
        )
        # 32*29*29 matches 65x65 (or 66x66) RGB input frames;
        # adjust the first Linear layer for any other frame size.
        self.classifier = nn.Sequential(
            nn.Linear(32*29*29, 128),
            nn.ReLU(),
            nn.Linear(128, 2)  # Tremor/No Tremor logits
        )

    def forward(self, x):
        # x: (B, 3, H, W) float tensor
        x = self.features(x)
        return self.classifier(x)
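The `32*29*29` in the first `nn.Linear` layer is tied to the input frame size, so it is worth deriving rather than guessing. The sketch below applies the standard `nn.Conv2d` output-size formula to the two conv layers of `TremorCNN`; the helper names are hypothetical.

```python
def conv2d_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension, per the nn.Conv2d shape formula."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def flattened_features(height, width, channels=32):
    """Flattened feature count after TremorCNN's two conv layers."""
    # (kernel, stride) for Conv2d(3, 16, 5, stride=2) and Conv2d(16, 32, 3).
    for kernel, stride in [(5, 2), (3, 1)]:
        height = conv2d_out(height, kernel, stride)
        width = conv2d_out(width, kernel, stride)
    return channels * height * width

n_65 = flattened_features(65, 65)   # 32 * 29 * 29, matches the classifier
n_64 = flattened_features(64, 64)   # 32 * 28 * 28, would NOT match
```

A 64x64 frame therefore needs `nn.Linear(32*28*28, 128)`; resizing frames to 65x65 keeps the layer as written.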

Step 3: LSTM for Accelerometer Tremor Classification

python

# models/lstm_model.py
import torch.nn as nn

class IMULSTM(nn.Module):
    def __init__(self, input_size=3, hidden_size=64):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.classifier = nn.Sequential(
            nn.Linear(hidden_size, 64),
            nn.ReLU(),
            nn.Linear(64, 2)  # Tremor/No Tremor logits
        )

    def forward(self, x):
        # x: (B, T, 3) window of accelerometer samples
        _, (h, _) = self.lstm(x)
        # h[-1] is the last layer's final hidden state, shape (B, hidden_size)
        return self.classifier(h[-1])
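With `batch_first=True`, the LSTM expects input of shape `(B, T, 3)`, so the continuous IMU stream has to be cut into fixed-length windows first. A minimal sketch, assuming 100-sample windows with 50% overlap; both values and the `make_windows` name are illustrative choices, not taken from the project.

```python
import numpy as np

def make_windows(samples, window=100, step=50):
    """Split an (N, 3) IMU array into overlapping windows of shape (B, window, 3)."""
    samples = np.asarray(samples, dtype=np.float32)
    starts = range(0, len(samples) - window + 1, step)
    if len(starts) == 0:
        # Not enough samples for a single full window.
        return np.empty((0, window, samples.shape[1]), dtype=np.float32)
    return np.stack([samples[s:s + window] for s in starts])

imu = np.zeros((400, 3), dtype=np.float32)   # 2 s of placeholder data at 200 Hz
batch = make_windows(imu)                    # shape (7, 100, 3)
```

`torch.from_numpy(batch)` then gives a tensor that can be passed straight to `IMULSTM`.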

Step 4: Fusion Architecture

python

# models/fusion_model.py
import torch
import torch.nn as nn

class FusionModel(nn.Module):
    def __init__(self, cnn, lstm):
        super().__init__()
        self.cnn = cnn
        self.lstm = lstm
        # Late fusion: 2 CNN logits + 2 LSTM logits -> 4 input features
        self.fc = nn.Sequential(
            nn.Linear(4, 64),
            nn.ReLU(),
            nn.Linear(64, 2)
        )

    def forward(self, image, imu):
        cnn_out = self.cnn(image)  # shape: (B, 2)
        lstm_out = self.lstm(imu)  # shape: (B, 2)
        fused = torch.cat([cnn_out, lstm_out], dim=1)  # shape: (B, 4)
        return self.fc(fused)

Step 5: Real-Time Feedback Logic

python

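# scripts/infer_realtime.py
import cv2
import torch
from models.cnn_model import TremorCNN
from models.lstm_model import IMULSTM
from models.fusion_model import FusionModel

# get_realtime_imu_data() and send_signal_to_robot_controller() are
# hardware-specific placeholders (e.g. a serial IMU reader) to be implemented.
model = FusionModel(TremorCNN(), IMULSTM())  # load trained weights before use
model.eval()
cap = cv2.VideoCapture(0)

with torch.no_grad():
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        # BGR uint8 frame -> RGB float tensor (1, 3, 65, 65), matching TremorCNN
        rgb = cv2.cvtColor(cv2.resize(frame, (65, 65)), cv2.COLOR_BGR2RGB)
        image = torch.from_numpy(rgb).permute(2, 0, 1).float().unsqueeze(0) / 255.0
        # IMU window (T, 3) -> (1, T, 3)
        imu = torch.as_tensor(get_realtime_imu_data(), dtype=torch.float32).unsqueeze(0)
        tremor_prob = torch.softmax(model(image, imu), dim=1)  # logits -> probabilities
        if tremor_prob[0, 1] > 0.7:
            print("⚠️ Tremor Detected!")
            send_signal_to_robot_controller()
cap.release()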
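The 0.7 threshold is only meaningful on a probability, so the two class logits from the model need a softmax first. A single noisy frame can also cross the threshold by chance, so it may help to require several consecutive positive frames before signalling the controller. The sketch below shows both ideas in plain Python; the debouncing step and its `k=3` setting are assumptions added for illustration, not part of the original pipeline.

```python
import math
from collections import deque

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

class TremorDebouncer:
    """Raise an alert only after `k` consecutive frames exceed `threshold`."""

    def __init__(self, threshold=0.7, k=3):
        self.threshold = threshold
        self.recent = deque(maxlen=k)   # sliding record of the last k decisions

    def update(self, tremor_prob):
        self.recent.append(tremor_prob > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)

debouncer = TremorDebouncer(threshold=0.7, k=3)
# Hypothetical per-frame logits in the order [no_tremor, tremor].
logit_stream = [[2.0, -1.0], [-1.0, 2.0], [-1.5, 2.0], [-2.0, 2.5], [1.0, 0.0]]
alerts = [debouncer.update(softmax(l)[1]) for l in logit_stream]
# alerts -> [False, False, False, True, False]
```

Larger `k` reduces false alarms but adds `k - 1` frames of latency, which has to fit inside the detection-time budget.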

Step 6: Visual Overlay of Instability

python

# scripts/visualize_overlay.py
import cv2

def overlay_tremor(frame, prob):
    """Draw a Tremor/Stable label on a BGR frame given the tremor probability."""
    label = "Tremor" if prob > 0.5 else "Stable"
    color = (0, 0, 255) if prob > 0.5 else (0, 255, 0)  # BGR: red / green
    cv2.putText(frame, label, (20, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, color, 2)
    return frame

Optional: Streamlit Dashboard

python

# dashboard/tremor_ui.py
import pandas as pd
import streamlit as st

st.title("🔬 Real-Time Tremor Detection")
video = st.file_uploader("Upload Tool Video")
imu = st.file_uploader("Upload IMU CSV")

if video and imu:
    st.success("Data Uploaded! Run model below 👇")
    if st.button("Run Detection"):
        imu_df = pd.read_csv(imu)  # expects an "acc_z" column
        st.video(video)
        st.line_chart(imu_df["acc_z"])

Evaluation Metrics

- Tremor Recall (minimize false negatives, i.e. missed tremors)

- Precision (avoid over-triggering on stable motion)

- Temporal Resolution (detection latency under 100 ms)
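These three metrics can be computed directly from per-frame labels and predictions. A minimal sketch in plain Python, assuming binary labels (1 = tremor) and a fixed frame period; the label sequences and the 60 fps rate are made-up illustration data, not results.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for the positive (tremor = 1) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def detection_latency_ms(y_true, y_pred, frame_period_ms):
    """Delay from the first true tremor frame to the first detection at or after it.

    Assumes y_true contains at least one tremor frame.
    """
    onset = y_true.index(1)
    for i in range(onset, len(y_pred)):
        if y_pred[i] == 1:
            return (i - onset) * frame_period_ms
    return None  # tremor never detected

y_true = [0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [0, 1, 0, 0, 1, 1, 0, 0]
precision, recall = precision_recall(y_true, y_pred)   # 2/3 and 0.5
latency = detection_latency_ms(y_true, y_pred, frame_period_ms=1000 / 60)
```

Here the detector fires two frames after onset, about 33 ms at 60 fps, which is inside the 100 ms target.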

Advanced Ideas

- Use SlowFast for video tremor modeling

- Add Kalman filter for IMU noise smoothing

- Generate Tremor Heatmaps on tool tip trajectory

- Integrate into ROS2 + MoveIt for real-time correction
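As a starting point for the Kalman-filter idea, the sketch below smooths a single accelerometer axis with a scalar Kalman filter under a random-walk state model. The process and measurement variances are illustrative assumptions, not tuned for any particular sensor.

```python
import random

def kalman_smooth(measurements, process_var=1e-3, meas_var=1e-1):
    """Scalar Kalman filter with a random-walk state model.

    process_var (Q) and meas_var (R) are illustrative values only.
    """
    estimate = measurements[0]   # initial state guess
    error = 1.0                  # initial estimate variance
    smoothed = []
    for z in measurements:
        # Predict: state unchanged, uncertainty grows by Q.
        error += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = error / (error + meas_var)
        estimate += gain * (z - estimate)
        error *= (1.0 - gain)
        smoothed.append(estimate)
    return smoothed

random.seed(0)
# Constant 1.0 reading corrupted by Gaussian noise (synthetic test data).
noisy = [1.0 + random.gauss(0.0, 0.3) for _ in range(500)]
smooth = kalman_smooth(noisy)
```

The ratio of `process_var` to `meas_var` controls how aggressively the filter smooths. If it is set too low, the filter can attenuate the tremor oscillation itself, so it should be tuned against recordings that contain known tremor.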
